Semantic Segmentation

Identify real world features in your scene.

Overview

Semantic segmentation is the process of assigning class labels to specific regions in an image. This richer understanding of the environment can unlock many creative AR features. For example: an AR pet could identify ground to run along, AR planets could fill the sky, the real-world ground could turn into AR lava, and more!

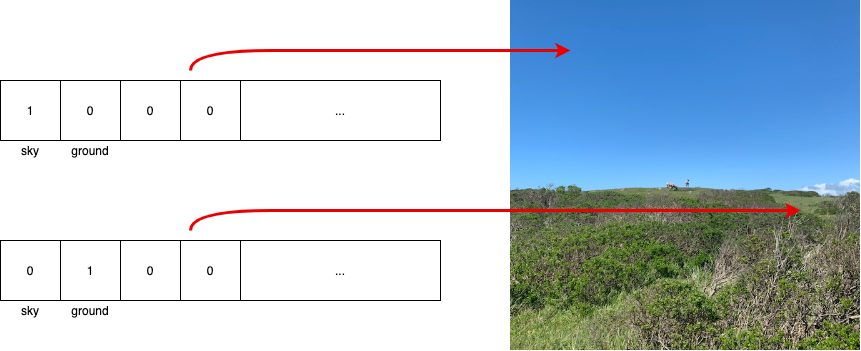

In ARDK, semantic predictions are served as a buffer of unsigned integers for each pixel of the depth map. Each of a pixel’s 32 bits correspond to a semantic class and is either enabled (value is 1) or disabled (value is 0) depending on whether a part of an object in that class is determined to exist at that pixel.

Note

Each pixel can have more than one class label, e.g. Artificial ground and Ground.

Note

You can also obtain pre-thresholded semantic confidence values, such as a pixel having a 80% likelihood that it represents “sky” and a 30% likelihood that it represents “foliage”, using an experimental feature. See Semantic Confidence for more details.

Enabling Semantic Segmentation

First, check whether the device is able to support semantic segmentation. The full list of supported devices is available on ARDK’s System Requirements page.

using Niantic.ARDK.AR.Configuration; var isSupported = ARWorldTrackingConfigurationFactory.CheckSemanticSegmentationSupport();

The value returned here is only a recommendation. Semantic segmentation will run on non-supported devices, but with degraded performance, accuracy, and stability levels.

void RunWithSemantics(IARSession arSession) { var config = ARWorldTrackingConfigurationFactory.Create(); config.IsSemanticSegmentationEnabled = true; // Default/recommended value is 20 to balance resource consumption with performance. config.SemanticTargetFrameRate = 20; arSession.Run(config); arSession.FrameUpdated += OnFrameUpdated; }

Accessing Semantic Data

Semantic data is provided through the IARFrame.Semantics property. Get the most recent frame either through IARSession.CurrentFrame property, or by subscribing to the IARSession.FrameUpdated event.

A few things to keep in mind:

An ARFrame’s

Semanticsvalue will at times be null, like while the algorithm is starting up, or when AR updates come in faster than semantic segmentation updates.

Channel Queries

There are also helper functions in the ISemanticBuffer interface to check if a channel is present:

Use

DoesChannelExistAtto check a specific pixel.Use

DoesChannelExistto check over the entire map.

All channels can be queried using either the channel name or channel index. However, keep in mind that new models released in the future may have different or rearranged semantic channels. It is recommended to use names rather than indices as the order is liable to change. The currently available channel names and order can be verified programmatically, as in the snippet below, or at the bottom of this page.

Creating a Semantic Texture

The raw values in Semantics.Data can be converted into a texture by calling the CreateOrUpdateTextureARGB32 method. A pixel in the texture will be white if the specified channel is present, and black if it is not.

using Niantic.ARDK.AR.Awareness.Semantics; Texture2D _semanticTexture; int _channelIndex = -1; void UpdateSemanticTexture(ISemanticBuffer semantics) { if (_channelIndex < 0) _channelIndex = semantics.GetChannelIndex("sky"); semantics.CreateOrUpdateTextureARGB32 ( ref _semanticTexture, _channelIndex ); }

See Intermediate Tutorial: Semantic Segmentation Textures for an example that gets the semantic texture for a given channel and renders it using an overlay effect.

Note that CreateOrUpdateTextureARGB32 returns the raw buffer data that hasn’t been cropped or aligned to the screen. See the next section for how to deal with this.

Aspect Ratio

The aspect ratio and resolution of the semantic buffer will not necessarily match that of the device camera or screen (those values are determined by the underlying deep-learning model). You can use CopyToAlignedTextureARGB32 to get a semantic texture aligned with the correct dimensions and aspect ratio of the given camera. This does a per-pixel sampling process, which can take some time. Alternatively, get the SamplerTransform from the AwarenessBufferProcessor and use this in a custom shader to apply the cropping and alignment in a single pass. See “Aligning Awareness Buffers with the Screen” in Rendering in ARDK for more details.

The alignment process may result in cropping. Cropping will always lead to data being cut off in the horizontal directions. Instead of cropping vertically, the method will instead add blank space to the right and left in order to avoid losing data.

Using ARSemanticSegmentationManager

To simplify the process of enabling and accessing semantic segmentation data, we provide a Manager you can add to your scene. The API reference and in-code comments/tool tips for ARSemanticSegmentationManager explains how to use it.

Using Semantic Data

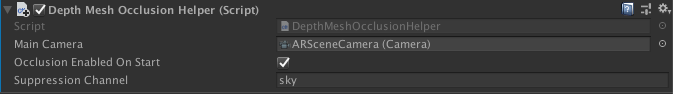

One use case for semantic data is to push back or forward different parts of the environment. Using a ARDepthManager along with a ARSemanticSegmentationManager provides an easy way to suppress (i.e. set to the maximum depth) a semantic channel.

This can be useful, for example, to:

Push back the sky and place a large virtual object far back to create the illusion of the object moving behind city skylines.

Push back the ground can be useful to reduce the chance of noisy/inaccurate depth outputs accidentally occluding your object.

See Occluding Virtual Content page for more details on working with depth and semantic channels.

Available Semantic Channels

The following table lists the current set of semantic channels. Note that the ordering of channels in this list may change with new versions of ARDK, so it is recommended to use names rather than indices in your app. Use the methods and properties from `ISemanticBuffer` such as `GetChannelIndex`, `ChannelCount`, and `ChannelNames` to get the list of channel indices and verify names at runtime.

Index |

Channel Name |

Notes |

|---|---|---|

[0] |

|

Includes clouds. Does not include fog. |

[1] |

|

Includes everything in artificial ground and natural ground (below), and may be more reliable than the combination of the two where there is ambiguity about natural vs artificial. |

[2] |

|

Includes dirt, grass, sand, mud, and other organic / natural ground. Ground with heavy vegetation/foliage won’t be detected as ground and will be detected as foliage instead. |

[3] |

|

Includes roads, sidewalks, tracks, carpets, rugs, flooring, paths, gravel, and some playing fields. |

[4] |

|

Includes rivers, seas, ponds, lakes, pools, waterfalls, some puddles. Does not include drinking water, water in cups, bowls, sinks, baths. Water with strong reflections may not be detected as water and instead be classified as whatever is reflected. |

[5] |

|

Includes body parts, hair, and clothes worn. Does not include accessories or carried objects. Does not distinguish between individuals. Mannequins, toys, statues, paintings, and other artistic expressions of a person are not considered a “person”, though some detections may occur. Photorealistic images of a person are considered a “person”. Model performance may suffer when a person is only partially visible in the image. Some relatively unusual body postures, such as a person crouching or with wide arm extensions, are known to be harder to predict. See Model card: Person Segmentation for details on the underlying model. |

[6] |

|

Includes residential and commercial buildings, modern and traditional. Should not be considered synonymous with walls. |

[7] |

|

Includes bushes, shrubs, leafy parts and trunks of trees, potted plants, flowers. |

[8] |

|

Grassy ground, e.g. lawns, rather than tall grass. |

ARDK also provides several experimental semantic channels that can be used to test or experiment with. See Experimental Semantic Channels.

Semantic Information and Mock Mode

If you want to access semantic data in the Unity Editor, you can use Mock mode. You can use one of several pre-made mock environments available in the ARDK Mock Environments package available on the ARDK Downloads page. Import the Mock Environments package into your scene, then in the ARDK > Virtual Studio window, under the Mock tab, select the prefab you want to use from the Mock Scene dropdown. When you run in Unity play mode, mock mode will automatically instantiate the prefab and the semantic objects will return semantic data for the channels they are set to. See for example ParkPond prefab’s Sky or Trees > Tree > Branches objects.

If none of the pre-made environments meet your needs, you can create your own mock environment. Create a mock AR environment by adding a prefab to your project with the mock environment geometry, along with a MockSceneConfiguration component on the root GameObject in the prefab. Add objects to your prefab that represent any desired semantic channels, for example, a plane that represents “ground”. Add a MockSemanticLabel component to each mock semantic object and set the “Channel” field in the component to the appropriate semantic channel. You can then choose your prefab in the Mock Scene dropdown.

Troubleshooting: Semantics Quality Considerations

The current model specializes in estimating semantics information for objects at eye-level. This means semantics estimation will generally be more accurate if the camera is facing towards the horizon as opposed to more upwards or downwards.